Not so long ago, many developers dismissed testing their code and left all verification to the compiler. The “works on my machine” motto became one of the best-known memes in programming. One of the first verifications that every programmer should perform is a pen-and-paper test (a manual walk-through) of the algorithm proposed as a solution to a problem, but that’s not enough to be confident about software quality. A constant challenge in programming is the progression from the abstract thought of solution design to actual code in a programming language.

Fortunately, quality of software is now part of the main conversation as software products compete against each other to eat the world. This reality has created a series of techniques to test many aspects of software and a toolset that each professional developer should know how and where to use.

In this guide, you will find the most common types of testing that can be added to any software project at any stage. They allow you to evaluate different aspects of code—from an atomic view to the global behavior of a solution—including end-user interaction as well as performance under several scenarios. You will find that unit testing, integration testing, acceptance testing, smoke testing, end-to-end testing, and performance testing each add a layer of quality assurance that increases confidence in software.

Unit Testing

The first and most common kind of test is the kind that verifies single components. This is called unit testing. The idea is to prove that an indivisible unit of logic (usually a single method) works as it is designed to work.

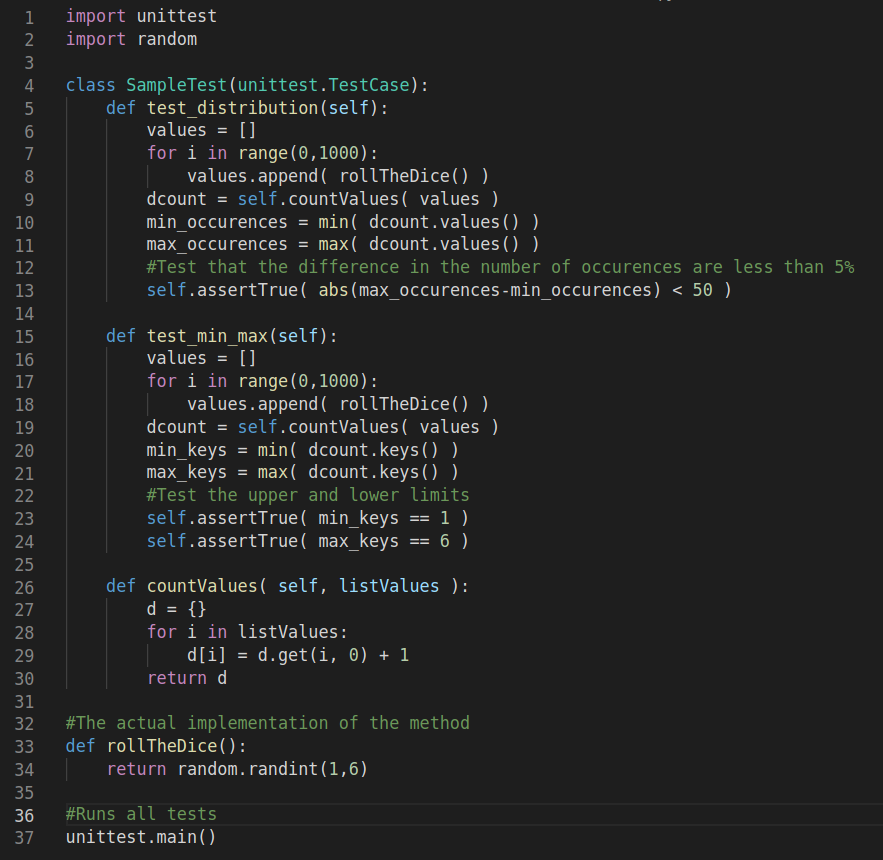

Imagine a software contract to build a method that returns the value of a rolled die. This can be accomplished in a single method (rollTheDice) in most of the high-level programming languages available today—just return a random number, right? Well, even with a trivial use case like this, you should start thinking about how you could test a solution for this problem before writing the actual solution to the problem. This approach is known as test-driven development (TDD).

In this example, you can test that the rolled die is fair, which means that every value is equally likely to come up. Otherwise, the die couldn’t be reliably used in many games. Because the results are random, fairness can only be checked statistically, over a large number of rolls. Other tests for this unit of logic could check the lower and upper limits: the result should always be a number from one (1) through six (6), no matter how many times you roll. With these two tests implemented, you will already have a test suite even before you write the actual method.
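The two tests described above could look roughly like the following Python sketch. The name `roll_the_dice` is a Python-style spelling of rollTheDice, and the seed, the number of rolls, and the 5 percent tolerance are illustrative assumptions rather than recommended values. In a TDD workflow the tests would be written first; the method is included here only so that the sketch runs.

```python
import random
from collections import Counter


def roll_the_dice(rng=random):
    """Return the value of one rolled six-sided die (1 through 6)."""
    return rng.randint(1, 6)


def test_value_is_within_limits():
    # No matter how many times the die is rolled, the value stays in 1..6.
    for _ in range(10_000):
        assert 1 <= roll_the_dice() <= 6


def test_die_is_roughly_fair():
    # A fixed seed makes the statistical check repeatable.
    rng = random.Random(12345)
    rolls = 60_000
    counts = Counter(roll_the_dice(rng) for _ in range(rolls))
    expected = rolls / 6
    # Each face should appear close to one sixth of the time
    # (the 5 percent tolerance is an assumption for this sketch).
    for face in range(1, 7):
        assert abs(counts[face] - expected) < 0.05 * expected
```

Functions named this way can be collected by a test runner such as pytest, or simply called directly.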

An important metric of code quality is how much of the solution code is covered by tests; this is called code coverage. It measures not only whether every method is tested, but also whether all the logical branches of a method are tested.

Imagine that you can now pass an argument to the example method to define the number of sides of the die. Your test suite should now include a test to verify that the value of the argument is at least two (2), which would be equivalent to tossing a coin, and the test for the lower and upper limits should be modified to cover this new possibility.
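A minimal sketch of the extended suite follows. It assumes that an invalid number of sides is rejected by raising `ValueError`; the article does not say how the method should react, so that choice, like the Python-style name `roll_the_dice`, is only illustrative.

```python
import random


def roll_the_dice(sides=6, rng=random):
    """Return the value of one rolled die with the given number of sides."""
    if sides < 2:
        # Assumed behavior: fewer than two sides is rejected with an exception.
        raise ValueError("a die needs at least two sides")
    return rng.randint(1, sides)


def test_rejects_fewer_than_two_sides():
    for bad_sides in (1, 0, -3):
        try:
            roll_the_dice(bad_sides)
        except ValueError:
            continue
        raise AssertionError(f"sides={bad_sides} should have been rejected")


def test_limits_follow_number_of_sides():
    # The upper limit now depends on the argument; two sides is a coin toss.
    for sides in (2, 6, 20):
        values = {roll_the_dice(sides) for _ in range(5_000)}
        assert min(values) >= 1 and max(values) <= sides
```

Both branches of the method (the rejection and the normal roll) are exercised, which is what branch-level code coverage would look for.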

Although TDD is an important tool, it has limits. Not all use cases are so simple that you can isolate them completely from external dependencies. In cases where a method depends on other components, unit testing frameworks provide the concept of mocks, which are fake components that respond exactly the way the tested unit needs in order to execute properly.

As you may know, real components do not always respond the way their mocks do, so even if you create a large stock of test suites, your software can crash in an unexpected way. Testing is basic protection, but may not always be enough.

Integration Testing

When one unit of logic depends on another, you should test the way this interaction works, even if you previously tested that each one behaves properly on its own. This is called integration testing. It provides a wider view of the expected behavior of a solution, paying attention not only to small chunks of logic but also to the overall orchestration of several components.

Imagine that there is a global upper limit for the number of sides of a die and that the limit is stored in an external file. Your rollTheDice method should validate the number-of-sides argument against the global value. In this case, you can have a new method (getMaxSides) that reads the external file and returns the global maximum value. Unit tests of rollTheDice could use a mock that always returns a fixed value as the global maximum.
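One way to sketch such a unit test in Python is with the standard library's `unittest.mock.Mock`. The file name `max_sides.txt`, the `max_sides_provider` parameter, and the use of `ValueError` are assumptions made for this illustration; the point is only that the test never touches the external file.

```python
import random
from unittest.mock import Mock


def get_max_sides(path="max_sides.txt"):
    """Read the global maximum from an external file (hypothetical file name)."""
    with open(path) as config_file:
        return int(config_file.read().strip())


def roll_the_dice(sides, max_sides_provider=get_max_sides, rng=random):
    """Roll a die after validating `sides` against the global maximum."""
    limit = max_sides_provider()
    if not 2 <= sides <= limit:
        raise ValueError(f"sides must be between 2 and {limit}")
    return rng.randint(1, sides)


def test_accepts_sides_up_to_mocked_maximum():
    # The mock stands in for get_max_sides and always answers 20.
    fake_get_max_sides = Mock(return_value=20)
    assert 1 <= roll_the_dice(20, max_sides_provider=fake_get_max_sides) <= 20
    fake_get_max_sides.assert_called_once_with()


def test_rejects_sides_above_mocked_maximum():
    fake_get_max_sides = Mock(return_value=20)
    try:
        roll_the_dice(21, max_sides_provider=fake_get_max_sides)
    except ValueError:
        return
    raise AssertionError("21 sides should exceed the mocked maximum of 20")
```

Passing the dependency as a parameter is a design choice for the sketch; patching the function in place would serve the same purpose.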

You should write an integration test that verifies the interaction between methods rollTheDice and getMaxSides and the external file. After all, what happens if the file is not available? What happens if it contains a number lower than two (2) as global maximum?

Integration testing should not be limited to just two components. Often, a replica of the production environment is used to run integration tests.

Acceptance Testing

Up until now, the testing techniques presented take into account only a technical point of view—which is important, but incomplete. How do you know how a subsystem or module in a software project will respond under certain circumstances? What are the criteria that should be applied to a test in order to accept its results as valid or not?

Acceptance testing is a formal definition of the valid response of a system under specific conditions. The idea behind the concept is to set up a scenario, get the result from the system, and evaluate its response against the expectations. Acceptance test results are binary, giving a valid/invalid result for each scenario evaluated.

But how do you create this scenario-action-evaluation in a way that conforms with the high-level requirements?

An interesting approach to tackling this kind of question is behavior-driven development (BDD), a technique in which the QA developers and stakeholders provide a description of the expected behavior of a software solution given a specific scenario before the software is built. This provides context for developers to build the interaction between components in a way that responds with the expected behavior.

Another advantage to BDD is that the tests are usually written in natural language with a common structure, the most common being the Given-When-Then acceptance criteria definition.
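As a rough illustration, the Given-When-Then structure can be mirrored directly in code. The `Scenario` class, the `evaluate` helper, and the two scenarios below are hypothetical and are not taken from any BDD framework; they only show how each scenario reduces to a binary valid/invalid result.

```python
import random
from dataclasses import dataclass
from typing import Any, Callable


def roll_the_dice(sides=6, rng=random):
    if sides < 2:
        raise ValueError("a die needs at least two sides")
    return rng.randint(1, sides)


@dataclass
class Scenario:
    name: str
    given: Callable[[], Any]     # sets up the context
    when: Callable[[Any], Any]   # performs the action
    then: Callable[[Any], bool]  # evaluates the outcome


def evaluate(scenario):
    """Run one scenario and return True (valid) or False (invalid)."""
    context = scenario.given()
    try:
        outcome = scenario.when(context)
    except Exception as error:
        # An error is also an outcome that the "then" step can judge.
        outcome = error
    return bool(scenario.then(outcome))


SCENARIOS = [
    Scenario(
        name="Given a six-sided die, when it is rolled, then the value is 1 to 6",
        given=lambda: 6,
        when=lambda sides: roll_the_dice(sides),
        then=lambda outcome: isinstance(outcome, int) and 1 <= outcome <= 6,
    ),
    Scenario(
        name="Given a one-sided die, when it is rolled, then the roll is rejected",
        given=lambda: 1,
        when=lambda sides: roll_the_dice(sides),
        then=lambda outcome: isinstance(outcome, ValueError),
    ),
]

results = {scenario.name: evaluate(scenario) for scenario in SCENARIOS}
```

In practice the scenario names would come from the stakeholders' natural-language descriptions, and dedicated BDD tools map those sentences to code like the lambdas above.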

Smoke Testing

The central idea of programmatic testing is that its execution can be automated and that you can rely on this automation to execute each time a new version of the software is built. As you can imagine, keeping software tested can take as much work as building the software itself.

A common practice in well-organized development teams is to define a core set of tests that each build must pass before releasing a new version. This is called smoke testing.

Each feature of a software solution can have this set of core tests that it must pass to answer the basic question, “Is this feature working?” Also, in order to optimize the testing and building time, a smoke test can include the tests for the new behaviors or fixed bugs included in the version, and the build can stop as soon as the smoke test fails. It doesn’t really matter if 99 percent of the tests pass if the 1 percent related to the main objective of the build fails.

Another objective of smoke testing is to make sure that previously functioning features continue to work properly after the system is changed. This is called regression testing and provides confidence that a software solution’s previous level of quality is not degraded by the addition of new logic or by a bug fix.
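The gating idea can be sketched as a small runner that executes the core tests first and skips everything else when one of them fails. The `run_build_checks` function, the shape of its report, and the two example checks are hypothetical; real build pipelines usually get this behavior from their test runner or CI configuration.

```python
import random


def roll_the_dice(sides=6, rng=random):
    if sides < 2:
        raise ValueError("a die needs at least two sides")
    return rng.randint(1, sides)


def smoke_roll_returns_a_value():
    # Core question: is the feature working at all?
    assert isinstance(roll_the_dice(), int)


def check_limits_for_many_sizes():
    # Part of the slower, fuller suite.
    for sides in range(2, 50):
        assert 1 <= roll_the_dice(sides) <= sides


def run_build_checks(smoke_tests, remaining_tests):
    """Run smoke tests first; only run the rest if all of them pass."""
    for test in smoke_tests:
        try:
            test()
        except AssertionError:
            # Stop immediately: the build is rejected without running the rest.
            return {"status": "rejected", "failed": [test.__name__],
                    "ran_full_suite": False}
    failed = []
    for test in remaining_tests:
        try:
            test()
        except AssertionError:
            failed.append(test.__name__)
    status = "accepted" if not failed else "rejected"
    return {"status": status, "failed": failed, "ran_full_suite": True}


report = run_build_checks([smoke_roll_returns_a_value],
                          [check_limits_for_many_sizes])
```

The time saved comes from the early return: a broken core feature is reported before the longer suite starts.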

End-to-End Testing

Not all software testing targets individual components or backend code. Many systems require interaction with end-users, and usually, this is one of the most important areas to test. This is called end-to-end testing, and it is centered on the user experience with a software system. Reliability and robustness are not enough if the interface is clunky, or if the software is bad at communicating the state or results of an operation.

Given the myriad of devices on which users interact with applications and the fragmented implementation of standards (such as HTML in current browsers), end-to-end tests must be automated in order to be run against several clients. Usually, the narrative of a use case or user story is followed and recorded as a sequence of actions to determine the way in which the software responds to the inputs. Then the sequence is translated to a script that can be executed as part of a release cycle.

The benefits of automation for end-to-end testing are pretty clear. It can be parallelized in order to test several platforms at the same time, can be scheduled or be part of a continuous integration/continuous deployment (CI/CD) workflow, and can include tests of different user behaviors or edge use cases with the use of simple parameters.

Performance Testing

Our final type of testing addresses the question, “How will your software respond under heavy load?” Performance testing techniques are developed to put software under expected and unexpected usage loads in order to measure its scalability and the way it uses the resources available.

Load tests simulate the expected number of users that your software should be able to handle with the infrastructure in which it is deployed. Stress tests then push the software beyond its expected limits to record the way it responds and handles load over its installed capacity. Does it crash and burn? Does it respond to all the load but degrade the user experience? Does it scale automatically to support the new limits?
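The shape of a load test can be sketched with simulated concurrent users and recorded response times. This is only an in-process illustration: `run_load`, its parameters, and the reported metrics are assumptions, and a real load or stress test would target the deployed system, usually with a dedicated tool.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def roll_the_dice(sides=6):
    return random.randint(1, sides)


def run_load(operation, users, requests_per_user):
    """Call `operation` from several simulated users and collect latencies."""

    def one_user():
        latencies = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            operation()
            latencies.append(time.perf_counter() - start)
        return latencies

    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(one_user) for _ in range(users)]
        latencies = sorted(t for future in futures for t in future.result())

    # Approximate 95th percentile: the value at 95 percent of the sorted list.
    p95 = latencies[int(0.95 * (len(latencies) - 1))]
    return {
        "requests": len(latencies),
        "p95_seconds": p95,
        "max_seconds": latencies[-1],
    }


# Expected load (assumed to be 10 users), then a stress run well beyond it.
load_report = run_load(roll_the_dice, users=10, requests_per_user=100)
stress_report = run_load(roll_the_dice, users=50, requests_per_user=100)
```

Comparing the two reports is the interesting part: a stress run shows whether response times stay flat, degrade gradually, or collapse once the expected limit is exceeded.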

A variant of the stress test is the spike test. In this kind of performance testing, the number of simultaneous users increases suddenly and returns to normal levels within a short period of time. The idea is to observe the way that the system recovers after the spike. This is useful to determine the availability window that should be expected in this kind of scenario.

Finally, the soak or endurance test puts a high level of load—but still under the maximum limits—on a system over long periods of time. This can give insights about the amount of data to be processed and the limits of infrastructure or operational costs of software under heavy usage.

Conclusion

Building software that can “eat the world” is not as simple as just coding it and launching it. Quality must be a mindset of each development team, and tests are a great way to apply this mindset to a product.

But keeping a healthy and automated test environment is a lot of work. The best way to think about it is to remember that this work is much cheaper than detecting errors in production environments. The return on investment for these different types of tests is highly positive but requires careful design, good tools, and committed teams.