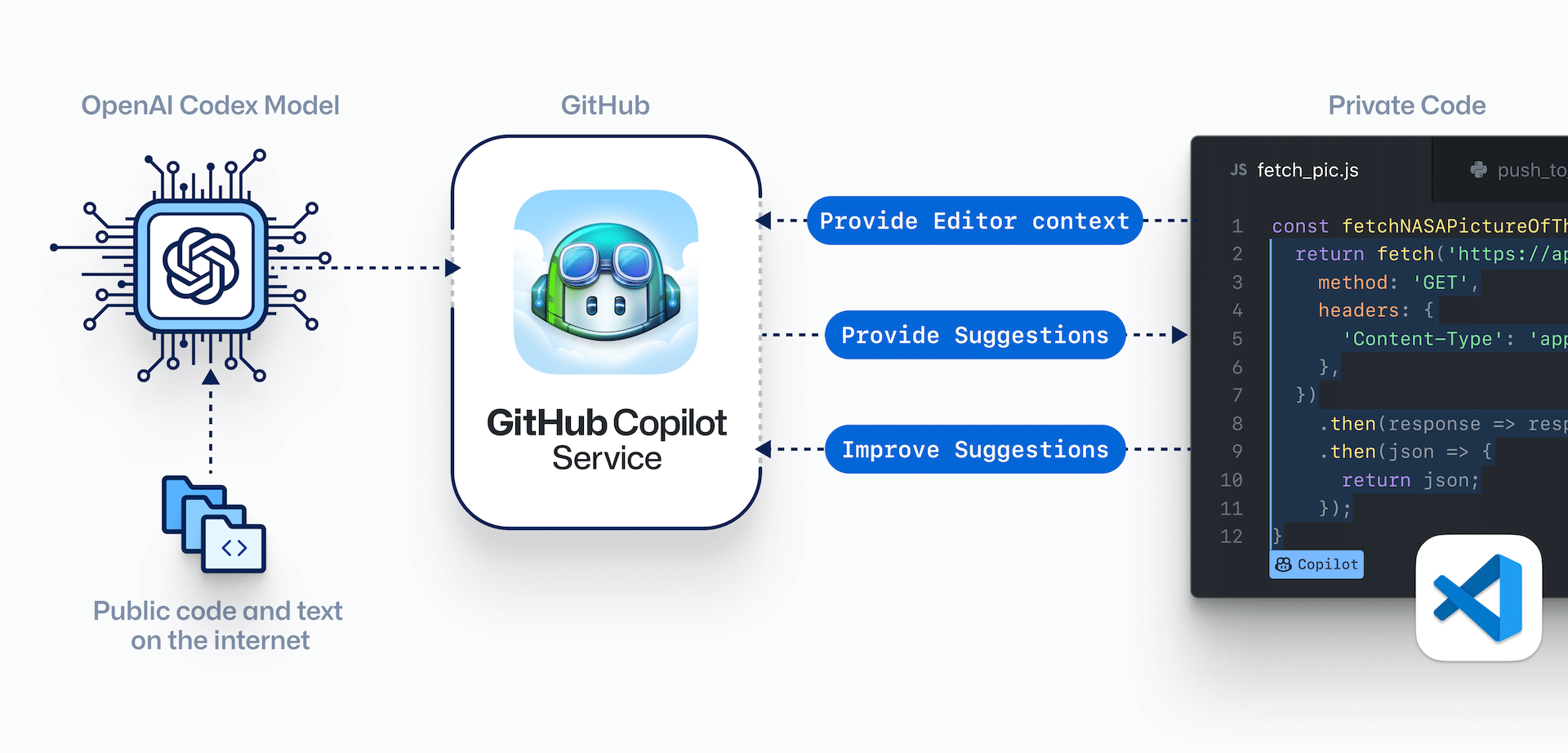

You may not be familiar yet with GitHub Copilot, which GitHub launched in collaboration with OpenAI in June 2021. Powered by OpenAI Codex, this AI pair programmer suggests lines of code and whole functions based on the comments and code it reads in your project.

The model behind Copilot was trained on source code from public GitHub repositories, and Copilot is expected to get smarter over time as more people use it.

This article will go through how GitHub Copilot works and why you might want to use it to optimize your testing process.

How Copilot Works

Above is GitHub Copilot in action. You write the docstring or the comment, and it suggests a code snippet. In some cases, just the function name or a part of the function is enough for it to generate the rest of the code. The code is uniquely generated for you, and you own it.

Below is a high-level overview of how it works:

Copilot can offer up to ten suggestions at a time. As you type more code, its suggestions become more accurate.

How to Access Copilot

Copilot is currently in technical preview and is available only to a limited number of developers. You can join the waitlist here.

Currently, the only editor Copilot supports is Visual Studio Code. You only need to install the Copilot extension and sign in with your GitHub account.

Why Use Copilot

The goal of Copilot is to increase engineers’ productivity. Copilot can save you from writing repetitive code, and it can help you work faster by cutting the time you spend looking up syntax. It can also suggest code that uses third-party APIs or libraries. It supports Python, JavaScript, Ruby, TypeScript, and Go, and it can be useful for writing test cases.

Demo Application

This article uses a demo application whose unit tests are written by Copilot. The functionality of each test is described in a docstring, and Copilot gets about five seconds each time to generate code.

The application is a basic API wrapper for the JSONPlaceholder API, written in Python. The wrapper has functions that make various HTTP requests to the available endpoints.

As you can see, Copilot wrote most of the code, including the suggested endpoints. It also chose good function names and used snake_case. For the POST method, it predicted the correct schema for the request body. However, it made a mistake: the route for get_comment() was incorrect. The correct route is /posts/1/comments or /comments?postId=1, but Copilot suggested (self.url + '/posts/' + str(comment_id)).json() + '/comments'. It also got the structure of the URL wrong, since it tried to append /comments after getting the JSON data.
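The generated wrapper appears only in the recording. Below is a minimal sketch of what a corrected version might look like. It uses Python's standard library in place of whatever HTTP client Copilot chose. The class name JSONPlaceholderAPI, the injectable transport parameter, and the method signatures are all hypothetical. The key change is that get_comments() builds the full /posts/<id>/comments route before any request is sent.

```python
import json
import urllib.request
from typing import Any, Callable, Optional

# A transport takes (HTTP method, path, JSON body or None) and returns decoded JSON.
Transport = Callable[[str, str, Optional[dict]], Any]

BASE_URL = "https://jsonplaceholder.typicode.com"


def urllib_transport(method: str, path: str, body: Optional[dict] = None) -> Any:
    """Send a real HTTP request to JSONPlaceholder using only the standard library."""
    data = None if body is None else json.dumps(body).encode("utf-8")
    request = urllib.request.Request(
        BASE_URL + path,
        data=data,
        method=method,
        headers={"Content-Type": "application/json; charset=UTF-8"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


class JSONPlaceholderAPI:
    """Hypothetical wrapper; method names follow the functions discussed in the article."""

    def __init__(self, transport: Transport = urllib_transport):
        self.transport = transport

    def get_posts(self) -> list:
        return self.transport("GET", "/posts", None)

    def get_post(self, post_id: int) -> dict:
        return self.transport("GET", f"/posts/{post_id}", None)

    def get_comments(self, post_id: int) -> list:
        # The whole route is built before the request is sent; the transport
        # decodes the JSON afterwards. /comments?postId=<id> would also work.
        return self.transport("GET", f"/posts/{post_id}/comments", None)

    def create_post(self, user_id: int, title: str, body: str) -> dict:
        payload = {"userId": user_id, "title": title, "body": body}
        return self.transport("POST", "/posts", payload)

    def update_post(self, post_id: int, title: str, body: str) -> dict:
        payload = {"id": post_id, "title": title, "body": body}
        return self.transport("PUT", f"/posts/{post_id}", payload)

    def delete_post(self, post_id: int) -> dict:
        return self.transport("DELETE", f"/posts/{post_id}", None)
```

With the default transport, JSONPlaceholderAPI().get_comments(1) sends a real request to https://jsonplaceholder.typicode.com/posts/1/comments. Because the transport is injected, unit tests can swap in a fake one and run offline.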

Unit Tests

Now Copilot will write unit tests for the wrapper functions. Unit tests check each function in isolation.

To avoid duplicating work, I will write tests for only a few of the GET request functions rather than all of them.

As you can see, Copilot again wrote most of the code. The test functions were written in a separate file, and Copilot correctly referenced the API wrapper from it.

Let’s discuss the code written for each function.

test_get_posts

Copilot got everything correct except for the key username, which doesn’t exist in the response object.

test_get_post

Again, Copilot added a check for username, although that key doesn’t exist.

test_get_comments

Copilot got this completely wrong. It did not pass the required post ID parameter, and for no apparent reason it asserted that the response contained 10 items. If you look at the GIF, you will also notice that it stopped predicting after the ==, which would leave a syntax error if you accepted the suggestion as is.
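Here is a hedged sketch of what corrected versions of the three GET tests might look like. To keep it runnable offline, it defines a hypothetical minimal API stand-in and a fake_transport. The transport serves made-up fixtures shaped like JSONPlaceholder posts (userId, id, title, body) and comments (postId, id, name, email, body). None of these names come from the article's code.

```python
from typing import Any, Optional

# Made-up fixtures with the same keys as JSONPlaceholder's posts and comments.
POSTS = [{"userId": 1, "id": i, "title": f"title {i}", "body": f"body {i}"} for i in (1, 2)]
COMMENTS = [
    {"postId": 1, "id": 1, "name": "n1", "email": "a@example.com", "body": "c1"},
    {"postId": 1, "id": 2, "name": "n2", "email": "b@example.com", "body": "c2"},
]
POST_KEYS = {"userId", "id", "title", "body"}


def fake_transport(method: str, path: str, body: Optional[dict]) -> Any:
    """Serve canned GET responses so the tests never touch the network."""
    if method == "GET" and path == "/posts":
        return POSTS
    if method == "GET" and path == "/posts/1":
        return POSTS[0]
    if method == "GET" and path == "/posts/1/comments":
        return COMMENTS
    raise AssertionError(f"unexpected request {method} {path}")


class API:
    """Minimal stand-in for the article's wrapper (GET methods only)."""

    def __init__(self, transport=fake_transport):
        self.transport = transport

    def get_posts(self):
        return self.transport("GET", "/posts", None)

    def get_post(self, post_id):
        return self.transport("GET", f"/posts/{post_id}", None)

    def get_comments(self, post_id):
        return self.transport("GET", f"/posts/{post_id}/comments", None)


api = API()


def test_get_posts():
    posts = api.get_posts()
    assert isinstance(posts, list) and posts
    # Check keys a post really has; there is no "username" key to assert on.
    assert POST_KEYS <= set(posts[0])


def test_get_post():
    post = api.get_post(1)
    assert post["id"] == 1
    assert set(post) == POST_KEYS


def test_get_comments():
    # Pass the required post ID and check the relationship, not a guessed count.
    comments = api.get_comments(1)
    assert comments
    assert all(comment["postId"] == 1 for comment in comments)
```

Checking that every comment has postId == 1 keeps the test valid even if the number of comments per post changes.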

Now let’s look at the tests for the POST, PUT, and DELETE methods.

Here is the code generated for each function.

test_make_post

For this function, I named the test test_make_post instead of test_create_post, and Copilot followed the name: it called api.make_post() instead of api.create_post(), even though api has no method called make_post(). It also forgot to pass the user ID parameter, although it correctly predicted that the ID of the new post would be 101.

test_update_post

Copilot got everything correct except for the check on userId: the response object doesn’t contain a userId key.

test_delete_post

The response object is empty, but Copilot added some unnecessary checks anyway.
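Here is a similar hedged sketch for the write tests. test_create_post calls the existing create_post() method and passes a user ID, and test_update_post drops the userId assertion. test_delete_post checks only for the empty object. The API stand-in and fake_transport are hypothetical. As described above, the fake returns id 101 for a new post and an empty object for a delete. It also assumes that a PUT echoes back the submitted fields plus the post ID.

```python
from typing import Any, Optional


def fake_transport(method: str, path: str, body: Optional[dict]) -> Any:
    """Mimic the write responses described in the article; nothing is stored."""
    if method == "POST" and path == "/posts":
        return {**body, "id": 101}  # new posts get ID 101
    if method == "PUT" and path.startswith("/posts/"):
        # Assumption: a PUT echoes the submitted fields plus the post ID.
        return {**body, "id": int(path.rsplit("/", 1)[1])}
    if method == "DELETE" and path.startswith("/posts/"):
        return {}  # a successful delete returns an empty object
    raise AssertionError(f"unexpected request {method} {path}")


class API:
    """Minimal stand-in for the article's wrapper (write methods only)."""

    def __init__(self, transport=fake_transport):
        self.transport = transport

    def create_post(self, user_id, title, body):
        return self.transport("POST", "/posts", {"userId": user_id, "title": title, "body": body})

    def update_post(self, post_id, title, body):
        return self.transport("PUT", f"/posts/{post_id}", {"id": post_id, "title": title, "body": body})

    def delete_post(self, post_id):
        return self.transport("DELETE", f"/posts/{post_id}", None)


api = API()


def test_create_post():
    # Call the method that exists and pass the user ID it requires.
    post = api.create_post(user_id=1, title="foo", body="bar")
    assert post["id"] == 101
    assert post["userId"] == 1
    assert post["title"] == "foo"


def test_update_post():
    post = api.update_post(1, title="new title", body="new body")
    assert post["id"] == 1
    assert post["title"] == "new title"
    # update_post never sends userId, so the response has no such key.
    assert "userId" not in post


def test_delete_post():
    # The response is empty, so that is the only thing worth asserting.
    assert api.delete_post(1) == {}
```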

Better Tests

In all the test functions above, Copilot wrote both the docstring and the code. To get more accurate results, it is better to write your own docstrings and function definitions. Next, we will mimic a user’s behavior by writing a couple of integration tests. Integration tests check that multiple units work correctly together, which helps ensure that a new feature doesn’t break an existing one. In an integration test, we try to simulate real-world usage. Below, we run an integration test that mimics a user’s behavior:

The code generated this time was better documented. However, Copilot essentially reused the snippets it had suggested for the individual unit tests. Instead of making a GET request to check whether the posts had been created or updated, it asserted on the response object.
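A read-after-write check has a catch with this particular API. JSONPlaceholder's guide states that write requests are faked rather than stored on the server, so a GET after an update against the live service would still return the original post. The sketch below therefore runs the user flow against a hypothetical in-memory backend, InMemoryBackend, through a minimal API stand-in. Both names are illustrations, not the article's code.

```python
import itertools
from typing import Any, Dict, Optional


class InMemoryBackend:
    """Hypothetical stateful fake of the /posts resource, used as a transport."""

    def __init__(self):
        self.posts: Dict[int, dict] = {}
        self._ids = itertools.count(101)  # first new post gets ID 101

    def __call__(self, method: str, path: str, body: Optional[dict]) -> Any:
        parts = path.strip("/").split("/")  # "/posts/101" -> ["posts", "101"]
        if method == "POST" and parts == ["posts"]:
            post = {**body, "id": next(self._ids)}
            self.posts[post["id"]] = post
            return post
        if len(parts) == 2 and parts[0] == "posts":
            post_id = int(parts[1])
            if method == "GET":
                return self.posts.get(post_id, {})  # {} for a missing post in this sketch
            if method == "PUT":
                self.posts[post_id] = {**body, "id": post_id}  # PUT replaces the post
                return self.posts[post_id]
            if method == "DELETE":
                self.posts.pop(post_id, None)
                return {}
        raise AssertionError(f"unexpected request {method} {path}")


class API:
    """Minimal stand-in for the article's wrapper."""

    def __init__(self, transport):
        self.transport = transport

    def get_post(self, post_id):
        return self.transport("GET", f"/posts/{post_id}", None)

    def create_post(self, user_id, title, body):
        return self.transport("POST", "/posts", {"userId": user_id, "title": title, "body": body})

    def update_post(self, post_id, title, body):
        return self.transport("PUT", f"/posts/{post_id}", {"id": post_id, "title": title, "body": body})

    def delete_post(self, post_id):
        return self.transport("DELETE", f"/posts/{post_id}", None)


def test_user_creates_edits_and_deletes_a_post():
    api = API(InMemoryBackend())

    created = api.create_post(user_id=1, title="draft", body="first version")
    # Read the post back with a GET instead of trusting the POST response.
    assert api.get_post(created["id"])["title"] == "draft"

    api.update_post(created["id"], title="final", body="second version")
    assert api.get_post(created["id"])["body"] == "second version"

    assert api.delete_post(created["id"]) == {}
    assert api.get_post(created["id"]) == {}
```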

Instead of accepting all of the code Copilot suggests, you can keep only part of it or make the docstring more detailed. Behavior-driven development (BDD), or Given-When-Then testing, will help you write better docstrings.

Behavior-Driven Development

The idea is to break down your test into the following format:

- given: describe the current state of the world

- when: describe the action or event

- then: describe the expected outcome

In the case of the API wrapper, a test case using BDD could be as follows:

given I make a DELETE request to delete a post

when the post ID is empty

then the API wrapper should return an error

Now let’s try writing this as a docstring using Copilot.

Although Copilot added extra docstrings, it got the code correct. I didn’t specify the type of error in my docstring, so it checked for a ValueError, which makes sense.

Let’s try rewriting the unit test case for test_delete_post using BDD:

given I make a DELETE request to delete a post

when the post is successfully deleted

then the API wrapper should return an empty object

In this case, it added the correct assert statement since we explicitly specified it.
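Both DELETE scenarios can be written with the Given-When-Then text as their docstrings, as in the hedged sketch below. The empty-ID test only passes if the wrapper rejects empty IDs. The ValueError guard in this delete_post() is therefore an assumption, chosen to match the error type Copilot checked for. The API stand-in and the fail_if_called and empty_object transports are hypothetical.

```python
from typing import Any, Optional


def fail_if_called(method: str, path: str, body: Optional[dict]) -> Any:
    """Transport that fails the test if a request is actually sent."""
    raise AssertionError(f"no request expected, got {method} {path}")


def empty_object(method: str, path: str, body: Optional[dict]) -> Any:
    """Transport that answers every request the way a successful DELETE does."""
    return {}


class API:
    """Minimal stand-in for the article's wrapper (DELETE only)."""

    def __init__(self, transport):
        self.transport = transport

    def delete_post(self, post_id):
        # Hypothetical guard: reject an empty ID before building the URL.
        if post_id is None or post_id == "":
            raise ValueError("post_id must not be empty")
        return self.transport("DELETE", f"/posts/{post_id}", None)


def test_delete_post_with_empty_id_raises():
    """
    Given I make a DELETE request to delete a post
    When the post ID is empty
    Then the API wrapper should return an error
    """
    api = API(fail_if_called)
    try:
        api.delete_post("")
    except ValueError:
        pass  # expected: the wrapper refused the empty ID
    else:
        raise AssertionError("expected ValueError for an empty post ID")


def test_delete_post_returns_empty_object():
    """
    Given I make a DELETE request to delete a post
    When the post is successfully deleted
    Then the API wrapper should return an empty object
    """
    api = API(empty_object)
    assert api.delete_post(1) == {}
```

Under pytest, the try/except/else block can be shortened to `with pytest.raises(ValueError): api.delete_post("")`.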

Let’s write a BDD test case for the update request:

given I make an update request with a post ID

when the post is successfully updated

then a GET request with the post ID should return the updated object

In this case, the test function was supposed to make a GET request to ensure that the post had been updated. However, the code Copilot generated only asserted on the response object of the update request. After I changed the first assert statement, it was able to predict the rest.
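The update scenario could look like the hedged sketch below. The Given, When, and Then steps map onto comments, and the check uses a separate GET. The store-backed fake from make_store_transport and the API stand-in are hypothetical. A stateful fake is needed because JSONPlaceholder does not persist updates.

```python
from typing import Any, Callable, Dict, Optional

Transport = Callable[[str, str, Optional[dict]], Any]


def make_store_transport(store: Dict[int, dict]) -> Transport:
    """Hypothetical fake that keeps posts in store, so a GET sees earlier writes."""

    def transport(method: str, path: str, body: Optional[dict]) -> Any:
        post_id = int(path.rsplit("/", 1)[1])  # "/posts/1" -> 1
        if method == "PUT":
            store[post_id] = {**body, "id": post_id}  # PUT replaces the stored post
        elif method != "GET":
            raise AssertionError(f"unexpected request {method} {path}")
        return store.get(post_id, {})

    return transport


class API:
    """Minimal stand-in for the article's wrapper (GET and PUT only)."""

    def __init__(self, transport: Transport):
        self.transport = transport

    def get_post(self, post_id: int) -> dict:
        return self.transport("GET", f"/posts/{post_id}", None)

    def update_post(self, post_id: int, title: str, body: str) -> dict:
        return self.transport("PUT", f"/posts/{post_id}", {"id": post_id, "title": title, "body": body})


def test_update_post_is_visible_to_get():
    """
    Given I make an update request with a post ID
    When the post is successfully updated
    Then a GET request with the post ID should return the updated object
    """
    # Given: post 1 already exists in the fake backend
    store = {1: {"userId": 1, "id": 1, "title": "old title", "body": "old body"}}
    api = API(make_store_transport(store))

    # When: the post is updated
    api.update_post(1, title="new title", body="new body")

    # Then: a separate GET returns the new values, not just the PUT response
    fetched = api.get_post(1)
    assert fetched["title"] == "new title"
    assert fetched["body"] == "new body"
```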

This just shows that Copilot is not perfect. You will have to verify that the code it generates is suitable for your use case.

Copilot’s Weaknesses and Strengths

By now, you have probably noticed some of Copilot’s weaknesses. In most cases, your docstrings have to be explicit. Copilot doesn’t know how your application is used and can’t mimic user behavior, so you still have to decide which edge cases to test and how to test them. The code Copilot generates can sometimes contain syntax errors, so you have to verify it.

Copilot also has its strengths, and one of them is generating data sets.

Below is the docstring for the function:

1. Function to create 100 random users using faker library

2. Each user should have between 1 and 5 posts

3. The user and the post should be sent to the API via a post request

Faker is a Python library that generates fake data. It can be used to populate databases, get random data, or create fake user profiles. You can find the repo here.

Watch Copilot and Faker in action:

Notice how Copilot set max_chars to 50 for the title and to 200 for the body. It also chose a random number of posts per user and correctly associated the posts with the user.

There are some limitations, however. In some cases, it is hard to specify the schema. If you do not reference the API wrapper, Copilot also adds email and dob fields to the schema. When I described the schema in the docstring, it didn’t generate fake names.

Conclusion

Copilot can increase your productivity and save you time when writing repetitive code. You do need to closely monitor Copilot’s output in some cases. Although most of my time was spent writing docstrings for the functions, I did have to modify some of the code Copilot generated.

However, when testing multiple GET requests or similar functions, Copilot was able to generate most of the tests required. This means integrating Copilot into your workflow once it’s fully available could be well worth the effort.